This is 2nd part of the third post from the Data Manipulations with PowerShell series, where we are diving into the specific phase of data operations processes and apply/use PowerShell along the way.

Previous posts:

- Empower your Data Retrieval with PowerShell

- Make your Data Processing more efficient with PowerShell

- Data Transformation Made Easy with PowerShell – Part 1

In part 1 we covered 3 out of 7 data transformation types, this means that today we will focus on the remaining 4:

- Data Encoding

- Data Normalization

- Data Reduction

- Data Conversion

Data Encoding

As mentioned previously, there are 3 common data encoding techniques: label, binary and ordinal encodings.

Out of the Box

Out of the box provided cmdlets and scripts give us all that we need to perform data encoding activities. Specifically, Select-Object, ForEach-Object and Add-Member are very useful.

Label Encoding

Assigns a unique numeric label to each category in a categorical variable, basically map categories to their corresponding numeric labels.

In the following example, we will use “Salary” column and will create numeric labels for its values.

$category = $Dataset3 | Select-Object -ExpandProperty "Salary" -Unique

$labelMapping = @{}

$label = 1

foreach ($c in $category) {

$labelMapping[$c] = $label

$label++

}

foreach($r in $Dataset3){

$r | Add-Member -NotePropertyName "Encoded-Salary" -NotePropertyValue $labelMapping[$($r.Salary)]

}

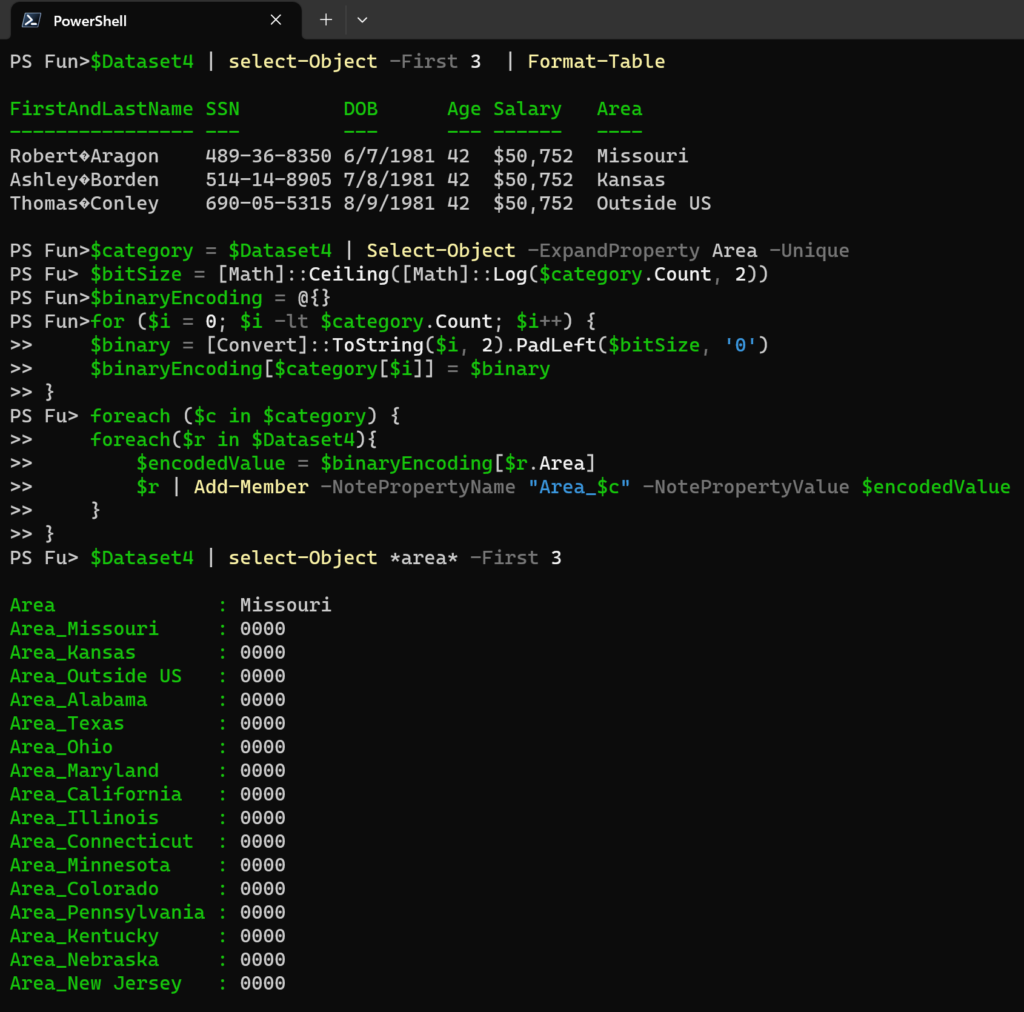

Binary Encoding

Idea here is to convert categorical variables into binary representations. So each category is encoded as a binary string, and each bit position represents the presence or absence of a particular category.

In the following example we will use this technique to the “Area” column.

$category = $Dataset4 | Select-Object -ExpandProperty Area -Unique

$bitSize = [Math]::Ceiling([Math]::Log($category.Count, 2))

$binaryEncoding = @{}

for ($i = 0; $i -lt $category.Count; $i++) {

$binary = [Convert]::ToString($i, 2).PadLeft($bitSize, '0')

$binaryEncoding[$category[$i]] = $binary

}

foreach ($c in $category) {

foreach($r in $Dataset4){

$encodedValue = $binaryEncoding[$r.Area]

$r | Add-Member -NotePropertyName "Area_$c" -NotePropertyValue $encodedValue

}

}

Ordinal Encoding

Using this technique, we assign a unique numeric label to each category based on their order or rank.

For the sake of consistency let’s use our “Area” column again.

$category = $Dataset4 | Select-Object -ExpandProperty "Area" -Unique

$category = $category | Sort-Object

$label = 1

$labelMapping = @{}

foreach ($c in $category) {

$labelMapping[$c] = $label

$label++

}

foreach($r in $Dataset4){

$encodedValue = $labelMapping[$r.Area]

$r | Add-Member -NotePropertyName "EncodedArea" -NotePropertyValue $encodedValue

}

Community Provided

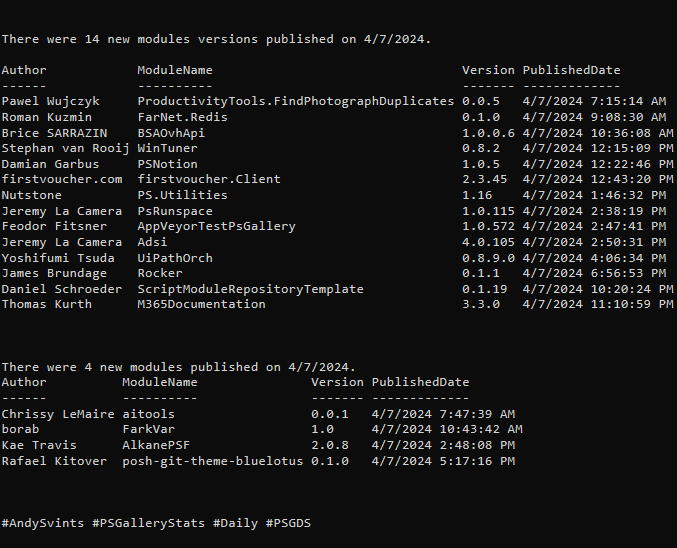

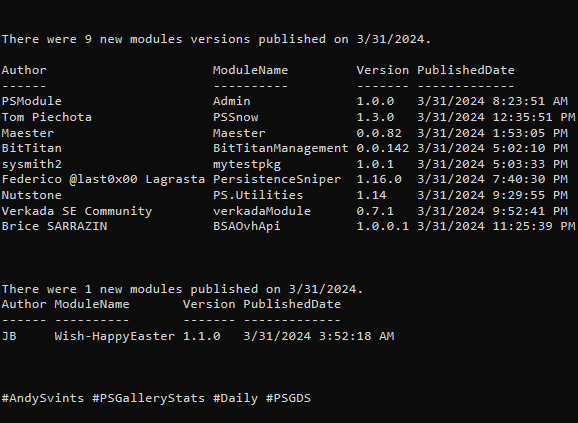

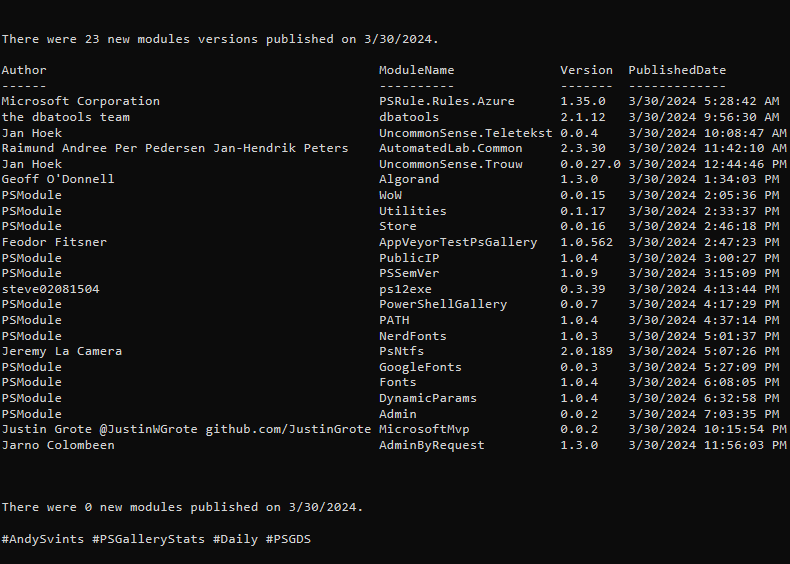

Number of modules with *encod* in the name or description – 42.

As always, I will highlight few of them:

- DataEncode by Rhys M – encode/decode all content within the CSV file.

- Base64String by Dmitry Stadub (aka stadub) – convert text from/to Base 64 String.

- SeeDatum by Marc Kassay (aka marckassay) – converts file contents or string into: decimal, binary, unicode and/or binary.

- Get-Base64RegularExpression by Lee Holmes – get a regular expression that can be used to search for content that has been Base64 encoded.

- ConvertFrom-GZipString by Cody Diehl – converts a GZipped and Base64 Encoded string into its original string content.

Data Normalization

In a nutshell, it is rescaling numeric data to a common scale. The most common normalization techniques are: min-max normalization, z-score normalization and decimal scaling.

Out of the Box

PowerShell provides all the needed functionalities, as the main thing here is basic math knowledge.

People who understand and know what the Data Normalization is and what techniques are available can apply math formulas even on paper and then PowerShell is a tool which helps to scale that formulas and apply to their data.

Min-max Normalization

The purpose of applying this technique is to scale the data to a fixed range, usually between 0 and 1.

Here is a formula:

Let’s look closer into this technique in the following example. We are going to normalize “Age” column.

$minValue = ($Dataset4 | Measure-Object -Property Age -Minimum).Minimum

$maxValue = ($Dataset4 | Measure-Object -Property Age -Maximum).Maximum

foreach($r in $Dataset4){

$normalizedValue = ($r.Age - $minValue) / ($maxValue - $minValue)

$r | Add-Member -NotePropertyName "NormalizedAge" -NotePropertyValue $normalizedValue

}

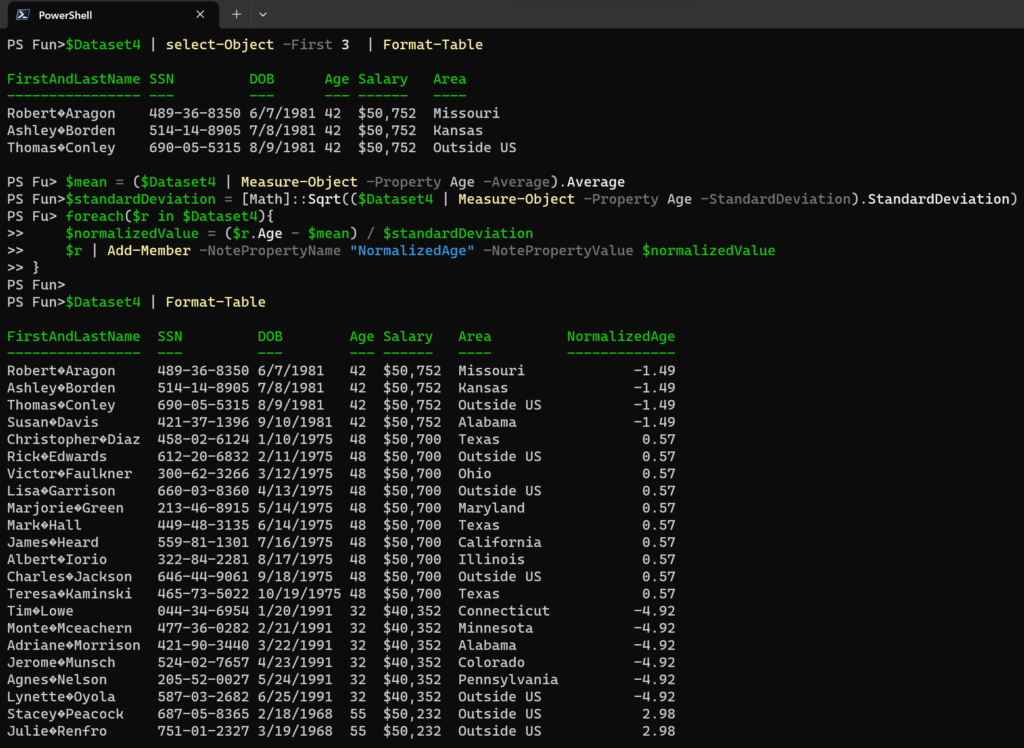

Z-score Normalization

This technique is used to transform the data to have a mean of 0 and a standard deviation of 1.

Here is the formula:

Now we are going to apply Z-score normalization to the “Age” (what a shocker, right) column:

$mean = ($Dataset4 | Measure-Object -Property Age -Average).Average

$standardDeviation = [Math]::Sqrt(($Dataset4 | Measure-Object -Property Age -StandardDeviation).StandardDeviation)

foreach($r in $Dataset4){

$normalizedValue = ($r.Age - $mean) / $standardDeviation

$r | Add-Member -NotePropertyName "NormalizedAge" -NotePropertyValue $normalizedValue

}

Decimal Scaling

Decimal scaling technique involves shifting the decimal point of the data to a specified position. It helps in reducing the range of values without losing the proportion between them.

Here is the formula:

Let’s see decimal scaling in action. We are going to apply it for the “Salary” column:

$maxValue = ($Dataset4 | Measure-Object -Property Salary -Maximum).Maximum

$scaleFactor = [Math]::Ceiling([Math]::Log10($maxValue))

foreach($r in $Dataset4){

$normalizedValue = $r.Salary / [Math]::Pow(10, $scaleFactor)

$r | Add-Member -NotePropertyName "NormalizedSalary" -NotePropertyValue $normalizedValue

}

Data Reduction

At the essence, this technique is used to eliminate redundant or irrelevant information, improve efficiency, and make data more manageable for future analysis.

Typically you would use one of the two available techniques: feature selection and feature extraction.

Out of the Box

One of the main cmdlets for data reduction is Select-Object. As you can guess, Select-Object is natively applicable for feature selection.

$Dataset4 | select-Object FirstAndLastName,DOB,Area,SSN

Community Provided

At the time of writing, PowerShell gallery do not contain any modules/scripts for data reduction.

Data Conversion

Data conversion can be applied to the dataset itself to convert it from one format to another, like CSV, JSON etc. Also it involves changing the data type of variables or columns in a dataset.

Out of the Box

Dataset

Out of the box we are presented with handful data conversion cmdlets.

Columns in Dataset

PowerShell provides built-in type conversion functions and operators to convert data between different types. The easiest way to convert data is to use casting operators. PowerShell contains [int], [double], [string], [bool] and [datetime]. To use them just prefix the value with casting operator and PowerShell will handle all the heavy lifting.

Here is an example:

$Dataset4 | ForEach-Object {

$_.DOB=[datetime]$_.DOB

$_.Age=[int]$_.Age

$_.Salary=[double]$_.Salary

}

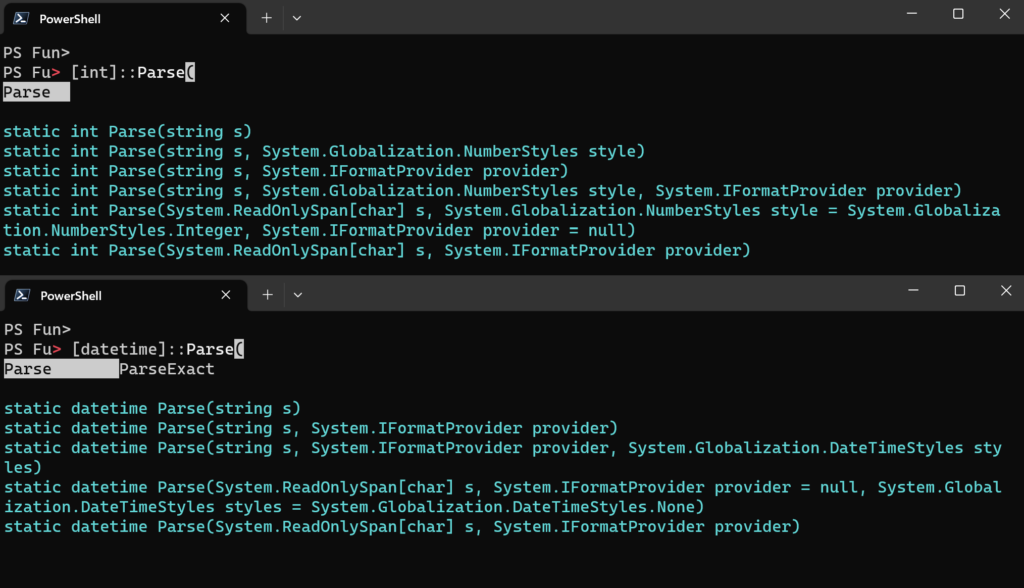

Also we can enhance casting with Parse methods:

In addition, with PowerShell we have access to the underlying .NET classes and methods that can be used for data conversion. Our main suspect is System.Convert.

Community Provided

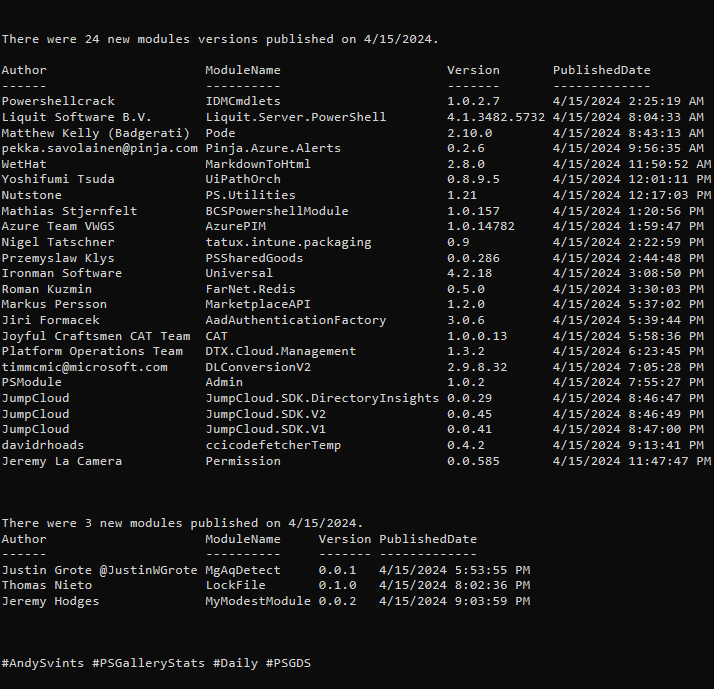

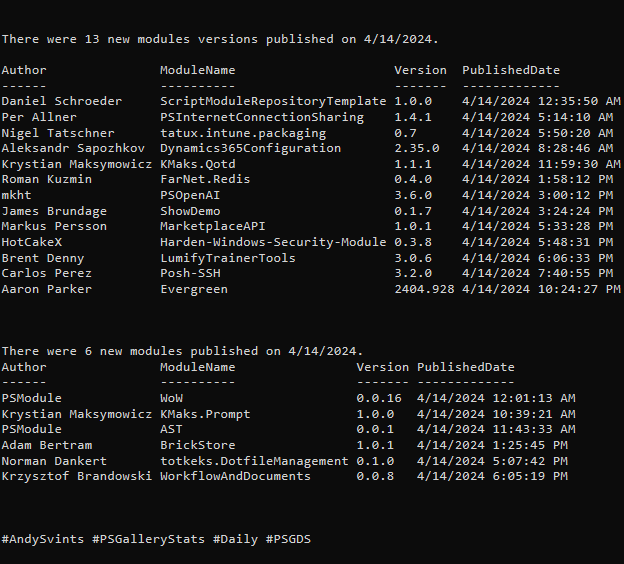

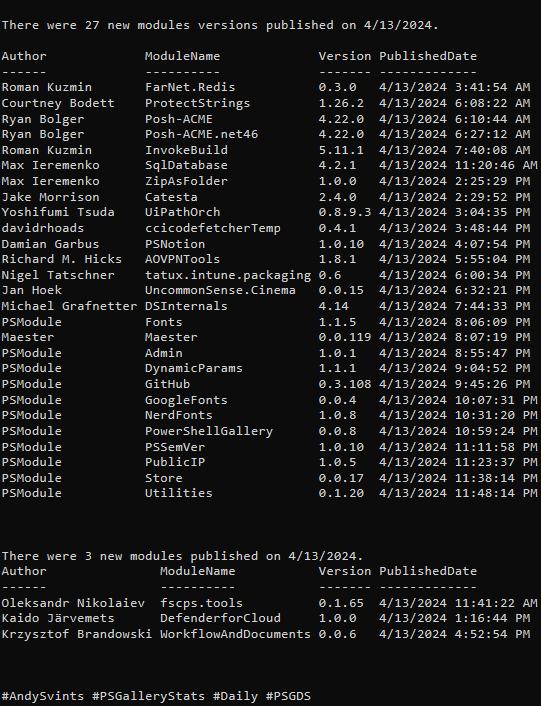

There are 1017 modules with *Convert* in name, description or tags.

I would like to mention few of them:

- Convert by Andrew Pearce (aka austoonz) – simplifies object conversions by exposing common requirements as PowerShell functions.

- powershell-yaml by Gabriel Adrian Samfira, Alessandro Pilotti – serializing and deserializing YAML.

- JWTDetails by Darren J Robinson – decode a JWT Access Token and convert to a PowerShell Object.

- Convert-PDF by Marcos Flavio Alves da Silva – convert pdf into string.

- ConvertFrom-JsonFast by William Easton – faster and more memory efficient implementation of ConvertFrom-Json.

- ConvertFrom-Json2XmlBulk by mattstark – recursively convert JSON files to XML files in bulk.

- MarkdownToHtml by Peter Ernst (aka WetHat) – convert Markdown files to static

HTML sites in various ways. - ConvertFrom-DataRow by Shannon Graybrook (aka realslacker) – convert System.Data.DataRow objects to OrderedDictionary or PSCustomObject.

- TranslitModule by Dima Stadub (aka stadub) – cyrillic/latin text transliteration.

- PowerShell.FileTransformations by HT – transforms, converts, and/or secures data files.

- PowerShellPivot by Douglas Finke (aka dfinke) – reshape data (produce a “pivot” table) based on column values. Supports aggregations.

Summary

In our messy and heterogeneous world there are a lot of tools for data transformation, and definitely PowerShell is not the first or even second choice. Nevertheless, PowerShell offers enough of features and functionality to cover your basic data transformation needs.

Main thing here is the knowledge of Data Transformation approaches and techniques and a decent PowerShellFu. It might seem that within PowerShell you are presented with limited resources (specifically for complex Data Transformation use cases), but (there is always “but”) these constraints can actually be a good thing and force you to be more creative. With PowerShell, less is often more. It may not be the go-to tool for data transformation but ( as I mentioned before there is always “but”) it is an adequate enhancement of your arsenal.

Next: PowerShell for Data Analysis: A Practical Guide to Extracting Insights from Your Data.

Data icons created by Freepik – Flaticon.

Thanks a lot for reading.