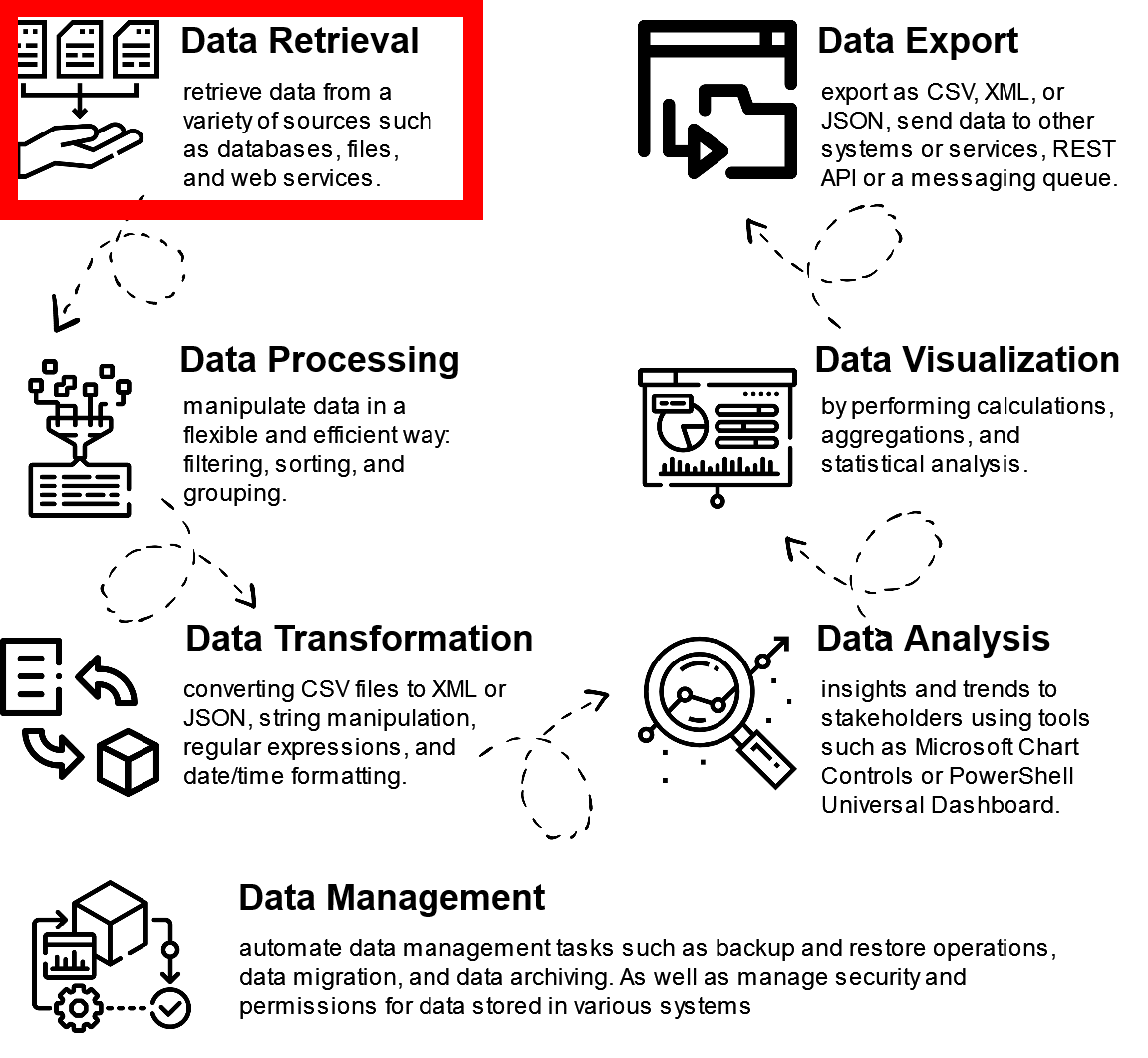

This is first post from the Data Manipulations with PowerShell series, where we are diving into the specific phase of data operations processes and apply/use PowerShell along the way.

This phase is crucial for the overall success, as the ease and speed of data gathering and ingesting into PowerShell will set up the tone for the future business value.

For starters, let’s look into the generic approaches and strategies to retrieve data.

- Files. Data can be stored in various file formats, such as CSV, JSON, or XML.

- Databases. Queries can be used to extract specific data from tables or join data from multiple tables.

- Websites. Web scraping involves parsing the HTML of a webpage to extract specific information.

- APIs. Application Programming Interfaces (APIs) allow software applications to communicate with each other and exchange data. APIs can be used to retrieve data from web services or cloud-based platforms.

The choice for retrieving data highly depends on the type of data, the source of the data, and the specific requirements of the project. For us it is much simpler, we assume that PowerShell is the only tool available for data collection and we try to use it all over.

For every data source we will see what out of the box cmdlets are available and then check any community created modules and scripts.

As you know, PowerShell Gallery is a default repository for all PowerShell modules. It contains modules developed and supported by Microsoft as well as by the marvelous PowerShell community. Community plays an important role as it creates an environment where enthusiasts, experts, and novices come together to collaborate and contribute. Although the original idea and concepts behind PowerShell Gallery are pure and noble, I personally find the not so pleasant side effects. But this is out of the scope of the current topic and maybe some time when I will have couple of minutes and some inspiration, I will write a blog post (most likely “rant”) about it.

Files

Files are pretty simple use case and PoweShell has quite a few cmdlets and ways to work with them.

File operations in PowerShell are controlled by the PowerShell FileSystem provider, which provides capability to get, add, change, clear, and delete files and directories.

For our specific data retrieval use case we do not care about file management, as we are focusing on identifying the needed files and retrieving its content.

Out of the Box

Out of the box capabilities are not that bad actually. PowerShell.Utility module (which contains 100+ cmdlets, please refer to Unleashing the Full Potential of the PowerShell.Utility Module for more details). provides at least 1 cmdlet for importing data from files: Import-CSV.

The syntax for using Import-Csv

Import-Csv -Path <path to CSV file>

The -Path

Another built in cmdlet for data retrieval – Get-Content, is part of PowerShell.Management module.

Get-Content is pretty universal as it reads raw file content as string and then you can leverage additional cmdlets to convert into the needed object type.

Content of the file is read one line at a time and returns a collection of objects, each of which represents a line of content. It returns a collection of string objects, each of which ends with an end-of-line character.

This cmdlets uses delimiter to divide the file into objects. The default is \n, the end-of-line character. If delimiter does not exist in the file, Get-Content returns the entire file as a single, undelimited object.

One of most typical examples is getting data from JSON files:

$Data=Get-Content data.json | ConvertFrom-JSON

There are other built in data transformation capabilities, please refer to Data Transformation Made Easy with PowerShell for more details.

Import-CSV and Get-Content cover the majority of data retrieval tasks from the files.

Community provided

Number of modules with *file* in the name – 164.

Number of modules that can be potentially applied to our use case – 39.

I would like to highlight some of them briefly:

- FileSplitter by Tobias – provides cmdlets to split a file in multiple parts, and to join parts to recreate the original file.

- ReadMsgFile by Christian Imhorst ( aka datenteiler) – ability to read Outlook MSG files without the need of Outlook client installed.

- Find-File by Claudio Zed – method for querying the Windows Search Index Database over simple command line arguments.

- Get-OneFileFromZip by Roy Ashbrook – help to extract one file from a zip.

- NPSLogFile by Andreas Bellstedt (aka AndiBellstedt) – parsing nps/ias log files.

- Read-ExcelFile by Pascal Rimark (aka Alcapsulator) – get data from Excel file without Excel installation.

- Find-InFiles by Phillip Beazley – finds a string within files within the given locations and/or files with names containing that string.

- Get-StringfromFiles by Ishan Shukla (aka isshukla) – search for the given string in all the files withing the given Directory.

I would strongly recommend exploring and playing (of course in dev env) with modules from PS Gallery.

Next let’s look into the ways/options to get data from Databases.

Databases

In our heterogeneous world there are a lot of different database types and as far as I am aware, we have at least 8 different types. They are: relational (the classics), non-relational aka NoSQL (rebel for the traditional data storage approach), Graph (distinctive and innovative approach), Columnar (niche, kind of specialized use cases), Document db (versatile and flexible for unstructured and semi-structured data), Time-Series (purpose-built for time stamped data), Key-Value Stores (simple and efficient), In-Memory Databases (the “Flash” like performance).

Out of the box

Because PowerShell is the Microsoft product first and foremost we are going to look into Microsoft SQL.

There are 2 official PowerShell SQL modules: SQLPS and SQLServer.

For our use case SQLPS is not helpful as it revolves around SQL server management & administration, not retrieving data from the tables.

SQLServer module on the other hand have at least 2 cmdlets that will help us to fulfill the task of data retrieval: Read-SqlTableData & Read-SqlViewData.

Pretty self-explanatory, these 2 cmdlets help to get data from SQL table and SQL table view respectively.

Read-SqlTableData -ServerInstance "SQLSVR-01\PRDINST" -DatabaseName "Inventory" -SchemaName "dbo" -TableName "Assets" -TopN 4

Read-SqlViewData -ServerInstance " SQLSVR-01\PRDINST " -DatabaseName "Inventory" -SchemaName "dbo" -ViewName "DecommissionedAssets" -TopN 2

Being a part of .NET PowerShell has access to all the .NET classes and assemblies both built-in and custom one. Access to Databases can be done using specific DLL and its methods.

For example Oracle (using Oracle.DataAccess.Client):

Add-Type -Path "C:\path\to\Oracle.DataAccess.dll"

$connectionString = "Data Source=your_data_source;User Id=your_username;Password=your_password;"

$connection = New-Object Oracle.DataAccess.Client.OracleConnection($connectionString)

$connection.Open()

$query = "SELECT * FROM Sales"

$command = New-Object Oracle.DataAccess.Client.OracleCommand($query, $connection)

$reader = $command.ExecuteReader()

while ($reader.Read()) {

$column1Value = $reader["Column1Name"]

$column2Value = $reader["Column2Name"]

# Process the values as needed

}

$reader.Close()

$connection.Close()

The overall approach to connect to other Database providers is pretty similar, just use the appropriate namespaces and connection strings for the specific database provider you’re working with.

Community provided

Number of modules with *sql* and *database* in the name – 154.

Number of modules that can be potentially applied to our use case – 141.

I would like to highlight some of them briefly:

- SimplySql by Mithrandyr – querying SQL (SQL Server, Oracle, PostgreSql, SQLite, & mySql) the PowerShell way: simple commands… powerful opportunities.

- SQLHelper by Rui Romano (aka DevScope) – collection of powerfull but simple powershell cmdlets for working with SQL databases.

- PPoShSqlTools by PPoshGroup – little pack of idempotent sql functions for Powershell that don’t require SQLPS, SQLCMD or any other components of SQL Server.

- SqlQueryHelper by Saw-Friendship – cmdLets designed to facilitate the effortless generation of an SQL query.

- SqlDatabase by Max Ieremenko – module for MSSQL Server, PostgreSQL and MySQL allows to execute scripts, database migrations and export data.

- PSCommonSql by Chris Duck (aka gpduck) – module for working with SQL databases using the System.Data.Common abstraction layer.

- sqlClientPS by Medius Research and Development AB – module to execute async sql queries.

- OledbSql by Nathan Hartley (aka treestryder ) – simple means to connect to any OleDB compatible database and execute SQL queries, returning the results as piped objects.

- PsSqlClient by Steffen Kampmann (aka abbgrade ) – replaces the SQL Server utilities SQLCMD and BCP with native PowerShell commands.

- SQLExtensions by gogbg – helps Invoking SQL queries without having the SQL Management tools

- mySQLite by Jeff Hicks (aka jdhitsolutions ) – set of PowerShell commands for working with SQLite database files, will only work on Windows platforms.

- ProductivityTools.SelectSQLView by Pawel Wujczyk – quickly select view or table from SQL Server.

- SQLiteModule by Christian Imhorst (aka datenteiler) – Invoke a SQLite query to SQLite database

- ReallySimpleDatabase by Dr. Tobias Weltner (aka TobiasPSP ) – SQLite database wrapper (comes with everything you need to start working with SQLite databases including the database engine. No dependencies. No prerequisites.)

And again, I would encourage you to explore PowerShell Gallery more at your own pace.

Websites

To put it simply, websites are the pages that are accessible via internet. They serve various purposes, including providing information, offering services, selling products, facilitating communication, and entertainment. Also, we, human beings, are using websites as the main vehicle to consume data. Web scraping on the other hand is the process of extracting data from websites automatically. The main goal of web scraping is to retrieve information and convert it into a structured format that can be analyzed or stored elsewhere. Although information published on the websites is considered public and anyone can freely access and read it, to scrape the data and use it for your own purposes is a little bit shady.

Web scraping itself is not illegal, its legality depends on the website’s terms of service and the purpose for which the data is being scraped.

Out of the box

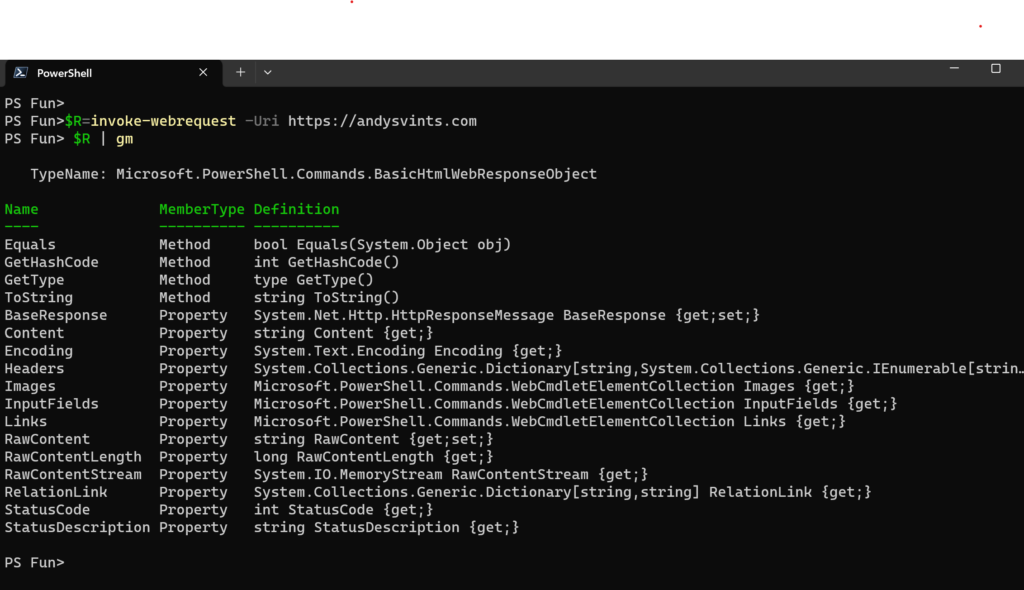

PowerShell.Utility module equips us with elegant Invoke-WebRequest cmdlet. In PowerShell universe it is commonly used for web scraping tasks.

$response = Invoke-WebRequest -Uri "https://andysvints.com"

By default, Invoke-WebRequest will not load dynamically generated content. You should use –UseBasicParsing to fetch all content (including dynamic one) from the page.

In addition, this versatile cmdlet allows you to include headers and cookies in your HTTP requests, which can be useful for scenarios where authentication or session handling is required.

$headers = @{

"User-Agent" = " Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/112.0.0.0 Safari/537.36 Edg/112.0.1722.58"

}

$response = Invoke-WebRequest -Uri "https://andysvints.com" -Headers $headers

Once HTML response has been retrieved, the next phase it to parse and transform the data.

Community provided

Number of modules with *scra* in the name – 4.

Number of modules that can be potentially applied to our use case – 2.

As a tradition already, I would like to highlight some of them briefly:

- Scraperion by Wil Taylor ( aka wiltaylor )– allow automation and scraping of both web page and the screen.

- PSSiteScraper by The Oh Brothers – cmdlets for scraping a site.

APIs

Application Programming Interface (API) is a set of rules and protocols that allows different software applications to communicate and interact with each other. It is a critical piece that enable the integration and interoperability of various applications and services.

Nowadays, in the era of API-first development all the products and services are built around the APIs. API is the primary building block, and all the features, functionality and integrations are designed and architected around it. This approach promotes decoupling, collaboration, and scalability.

PowerShell provides built in Invoke-RestMethod cmdlet to interact with RESTful APIs. It can send HTTP requests to API endpoints and retrieve the response. You can specify the HTTP method, headers, authentication, and body content in the request.

$response = Invoke-RestMethod -Uri "https://www.andysvints.com/feed/" -Method GET -Headers $headers -Body $body -ContentType "application/json"

The breadth of APIs available across industries, services, and platforms means that there are countless possibilities for leveraging their capabilities within PowerShell. Usage scenarios for APIs within PowerShell are virtually limitless, so it definitely deserves a dedicated blog post (some time in future =P ).

To sum up, PowerShell is very flexible and versatile tool. It can be easily applied to multiple use cases and act as very powerful tool in your toolbox. As for our Data retrieval use case, I think PowerShell is an awesome instrument even with out of the box functionality it got you covered. If you need more functionality and features, tap into the PowerShell gallery to extend the applicability tenfold.

Next: Make your Data Processing more efficient with PowerShell

Data icons created by Freepik – Flaticon

Thanks a lot for reading.